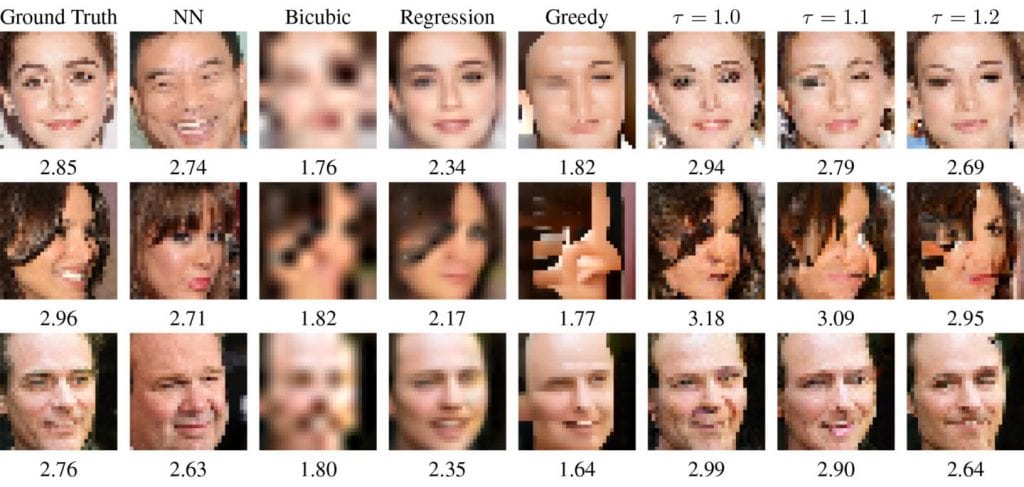

The new system, christened Pixel Recursive Super Resolution, can synthesize realistic details in photos and increase their resolution. A single low-resolution image may correspond to many different high-resolution images, so the system produces an enlargement based on the assumptions of a model about what the missing detail probably looks like. The software uses artificial intelligence to choose the most plausible alternative, relying on two convolutional neural networks. It works as follows: starting from pixelated images of 8 x 8 px, the Google Brain program first uses the “conditioning network”, which compares the low-resolution input with high-resolution photos that have been reduced to the same size, to test whether they correspond.
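The comparison step described above can be illustrated with a toy sketch. This is not the conditioning network itself, which is a trained convolutional network; it only shows the underlying idea of shrinking high-resolution candidates to the size of the input and checking how closely they match. The function names, the block-averaging downscale, and the mean-squared-error measure are all assumptions made for illustration.

```python
def downscale(image, factor):
    """Shrink a square grayscale image (list of rows) by averaging factor x factor blocks."""
    size = len(image) // factor
    return [
        [
            sum(image[r * factor + dr][c * factor + dc]
                for dr in range(factor) for dc in range(factor)) / factor ** 2
            for c in range(size)
        ]
        for r in range(size)
    ]


def mean_squared_error(a, b):
    """Average squared pixel difference between two images of equal size."""
    pixel_count = len(a) * len(a[0])
    return sum(
        (pa - pb) ** 2
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    ) / pixel_count


def best_match(low_res, candidates, factor):
    """Index of the high-res candidate whose downscaled version is closest to low_res."""
    errors = [mean_squared_error(low_res, downscale(c, factor)) for c in candidates]
    return min(range(len(candidates)), key=errors.__getitem__)
```

With an 8 x 8 input and 32 x 32 candidates, `best_match(low_res, candidates, 4)` would return the position of the candidate that looks most like the input once reduced. Several candidates can match equally well, which is why a low-resolution image alone does not determine a unique enlargement.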

Second, the “prior network” uses a PixelCNN architecture to add realistic, high-resolution details to the image. To do this, the system makes assumptions by analyzing a database of photographs of the same type of object. In the tests, it drew on a collection of celebrity photos to obtain the additional information for faces, and on photographs of similar rooms to reconstruct a room. Finally, to create the final super-resolution image, the assumptions of the two neural networks are merged, producing fairly realistic photographs of larger size and higher quality. Although the result is not a real photo but an approximation obtained through image processing, the technology could be very useful in surveillance, forensic medicine, and other areas.
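How the two networks' assumptions might be merged can be sketched for a single output pixel. The sketch assumes that each network produces one score (a logit) per possible pixel value, that the scores are added, and that a softmax turns the sum into probabilities. The article does not spell out the mechanism, so treat this as one plausible reading; the function and parameter names are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(value - largest) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def merge_predictions(conditioning_logits, pixelcnn_logits):
    """Add the two networks' per-value scores, then normalize (assumed merge rule)."""
    combined = [a + b for a, b in zip(conditioning_logits, pixelcnn_logits)]
    return softmax(combined)
```

Under this assumption, a pixel value that both networks favor ends up with a high probability, while a value favored by only one of them is weakened by the other's lower score.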

